The Evolution of Data Center Design

Early Mainframe Era (1950s-1970s)

In the mainframe era, a company’s entire compute capacity lived in a single, cavernous room. Power densities were modest—about 100–200 W per square foot—so a few air handlers could keep equipment cool without elaborate backup. The arrival of raised floors solved cable-management headaches and doubled as a basic air plenum, but true redundancy was rare; reliability depended more on meticulous operators than on duplicate gear. Designs therefore concentrated on keeping one central machine online rather than engineering complex fail-over paths.

Early mainframe room

Client-Server & Enterprise Growth (1980s-1990s)

As businesses embraced distributed computing, on-premises enterprise data centers proliferated. Rising power demand called for more deliberate cooling strategies, which led to natural convection cooling and hot-aisle/cold-aisle layouts. Redundancy standards evolved from basic N to N+1 configurations, reflecting growing expectations for uptime and reliability. Power densities climbed to roughly 300–500 W per square foot, with typical rack loads around 4 kW.

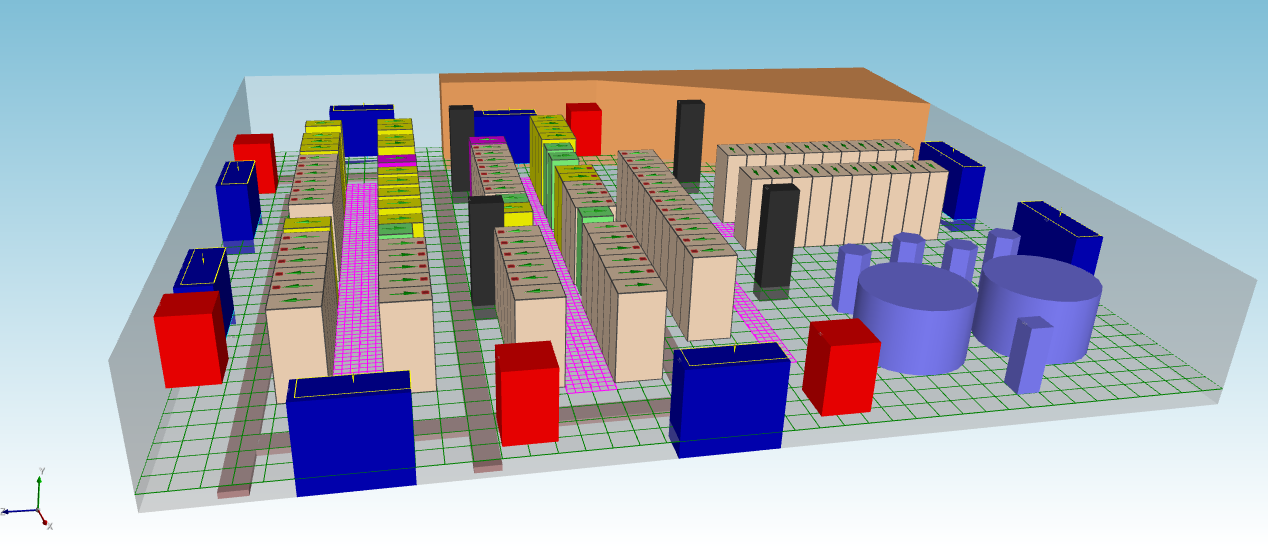

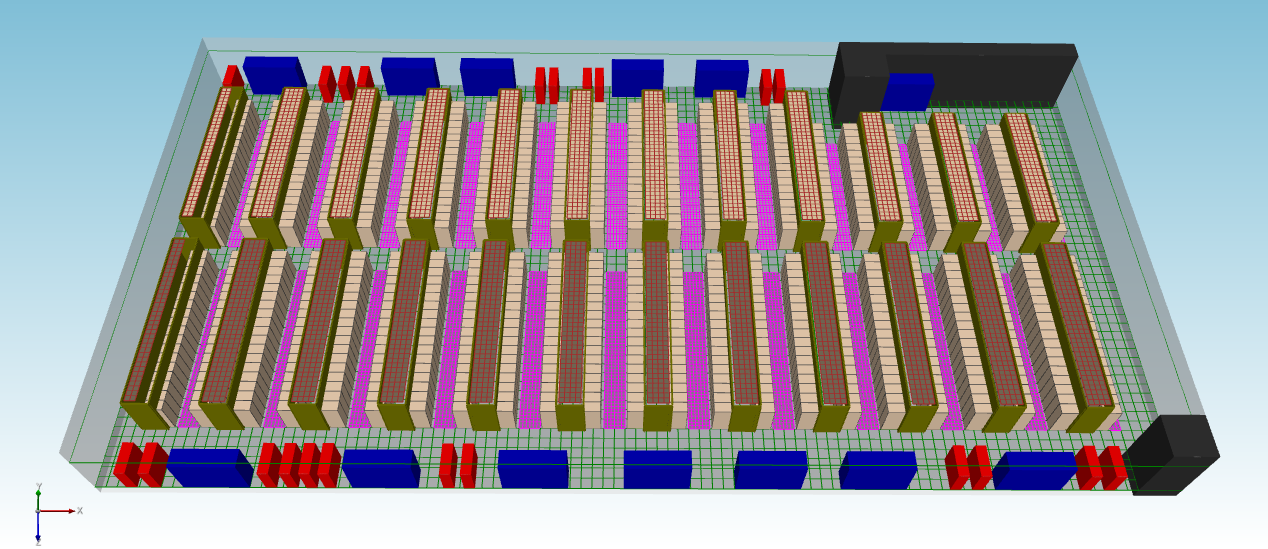

Some of the early challenges of underfloor cooling can be demonstrated through CFD simulation.


Geometry of a naturally convective data center modeled in CoolSim

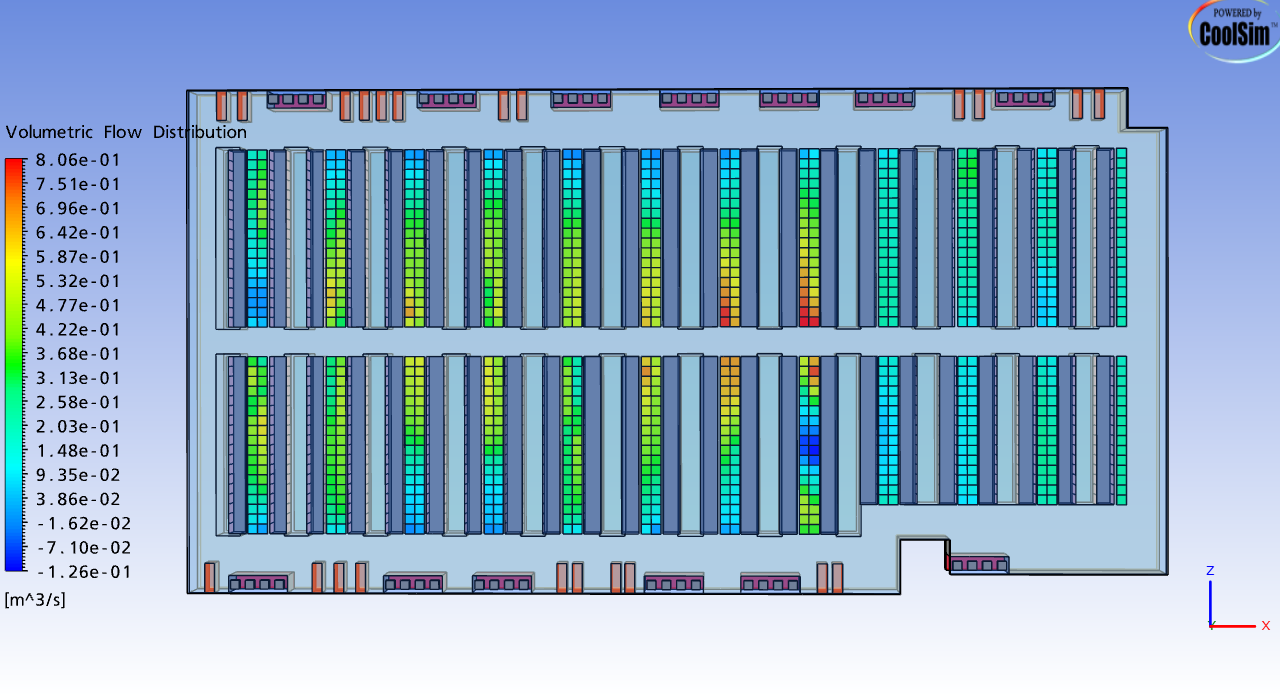

Note the placement of the computer room air conditioner (CRAC) units, colored in blue, around the perimeter of the room. The high-velocity air supplied by the CRACs blows past the outer set of tiles and oversupplies the central tiles, as shown below:

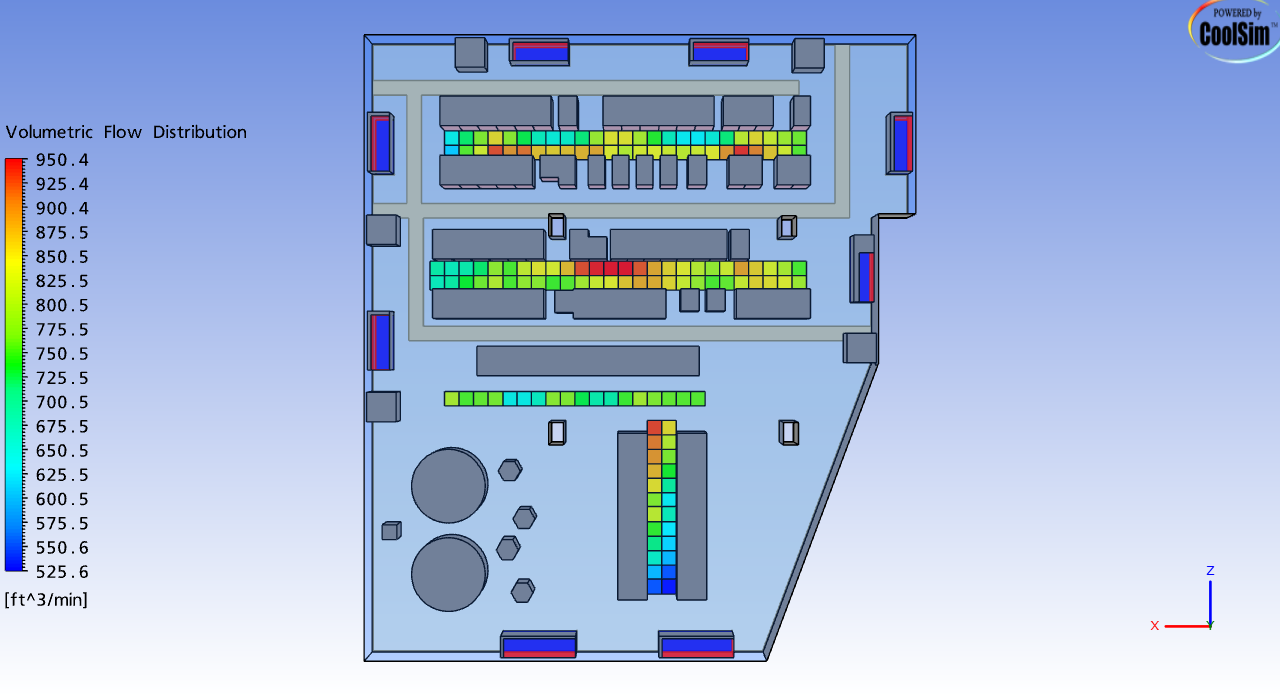

Non-uniform flow distribution through perforated floor tiles

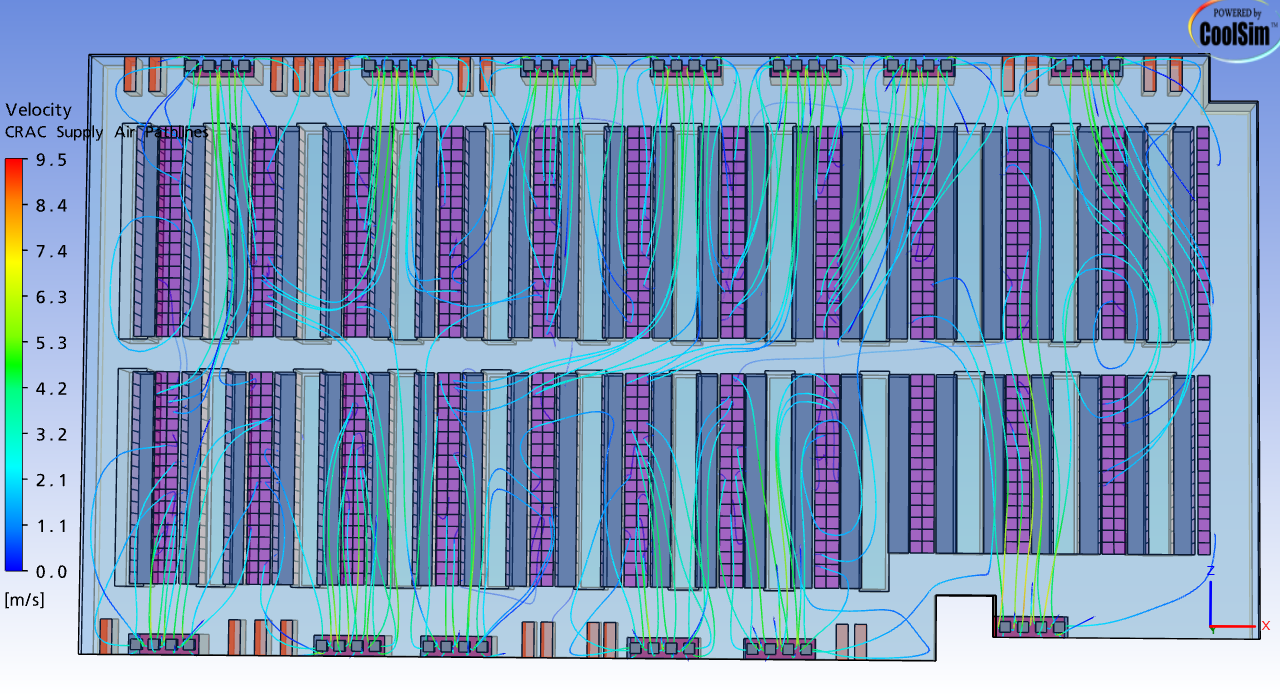

The oversupplied air bypasses the racks and returns to the CRACs without the desired temperature rise, lowering CRAC efficiency:

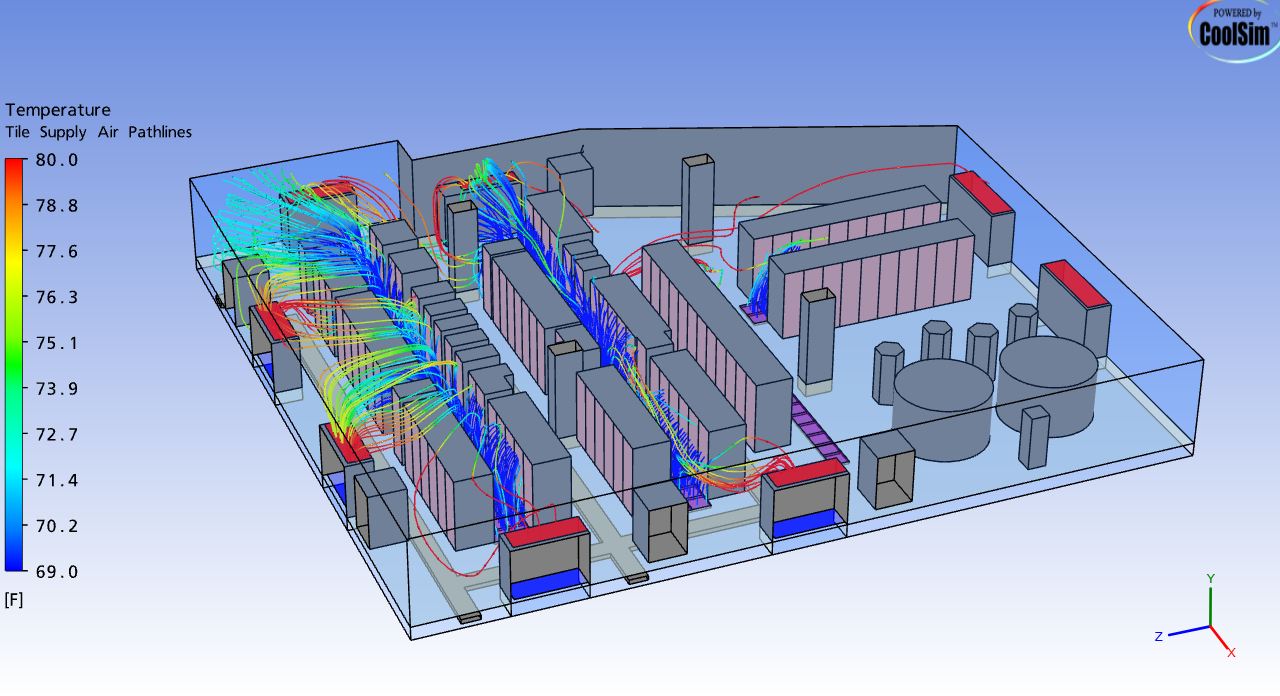

Pathlines from perforated floor tiles bypassing racks
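The effect of bypass air on CRAC return temperature can be illustrated with a simple mixing balance. The sketch below is not taken from the CoolSim model; it assumes the bypass stream and the rack exhaust mix perfectly at equal air density, with no hot-air recirculation, and the function name and the 65 °F supply and 20 °F rack temperature rise are illustrative values only.

```python
def return_air_temp_f(supply_f: float, rack_delta_t_f: float, bypass_fraction: float) -> float:
    """Estimate the mixed CRAC return temperature in degrees Fahrenheit.

    Assumes perfect mixing of two streams at equal density:
    - bypass air that returns at supply temperature, and
    - rack exhaust that has picked up rack_delta_t_f of heat.
    """
    if not 0.0 <= bypass_fraction <= 1.0:
        raise ValueError("bypass_fraction must be between 0 and 1")
    rack_exhaust_f = supply_f + rack_delta_t_f
    # Flow-weighted average of the unheated and heated streams.
    return bypass_fraction * supply_f + (1.0 - bypass_fraction) * rack_exhaust_f


if __name__ == "__main__":
    # Illustrative inputs only: 65 F supply air, 20 F rise across the racks.
    for fraction in (0.0, 0.25, 0.5):
        temp = return_air_temp_f(65.0, 20.0, fraction)
        print(f"bypass {fraction:.0%}: return air {temp:.1f} F")
```

Under these assumptions, every additional increment of bypass pulls the return temperature closer to the supply temperature, which is the reduced temperature rise the pathlines show.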

Bypass air can be mitigated through cold aisle containment, while the non-uniform flow distribution through the tiles can be addressed by swapping in more restrictive or more open tiles where needed to balance the flow.

Internet & Colocation Expansion (2010s)

The explosion of digital services and cloud computing drove a new generation of multi-tenant colocation facilities. Tier classifications from the Uptime Institute became industry benchmarks, with N+1 and even N+2 cooling redundancy commonplace. Power efficiency became a growing concern, leading to metrics such as Data Center Infrastructure Efficiency (DCiE) and Power Usage Effectiveness (PUE) that quantify facility overhead; average PUE hovered around 2.0. Rack densities increased to about 10 kW each, and raised-floor downflow CRAC units often supported over 800 racks in halls exceeding 3 MW of IT load.
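For readers unfamiliar with the two metrics, PUE is total facility power divided by IT power, and DCiE is its reciprocal expressed as a percentage. The short sketch below works through the arithmetic for a hall like the one described; the 6,000 kW total facility figure is an assumption chosen to match a PUE of 2.0 and is not a measured value, and the function names are illustrative.

```python
def pue(total_facility_kw: float, it_load_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_load_kw <= 0:
        raise ValueError("it_load_kw must be positive")
    return total_facility_kw / it_load_kw


def dcie_percent(total_facility_kw: float, it_load_kw: float) -> float:
    """Data Center Infrastructure Efficiency: the reciprocal of PUE, in percent."""
    if total_facility_kw <= 0:
        raise ValueError("total_facility_kw must be positive")
    return 100.0 * it_load_kw / total_facility_kw


if __name__ == "__main__":
    it_kw = 3000.0     # 3 MW of IT load, as in the text
    total_kw = 6000.0  # assumed total draw, chosen so that PUE = 2.0
    print(f"PUE  = {pue(total_kw, it_kw):.2f}")
    print(f"DCiE = {dcie_percent(total_kw, it_kw):.0f}%")
    print(f"Non-IT overhead = {total_kw - it_kw:.0f} kW")
```

At a PUE of 2.0, every watt delivered to IT equipment is matched by another watt spent on cooling, power distribution, and other overhead.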

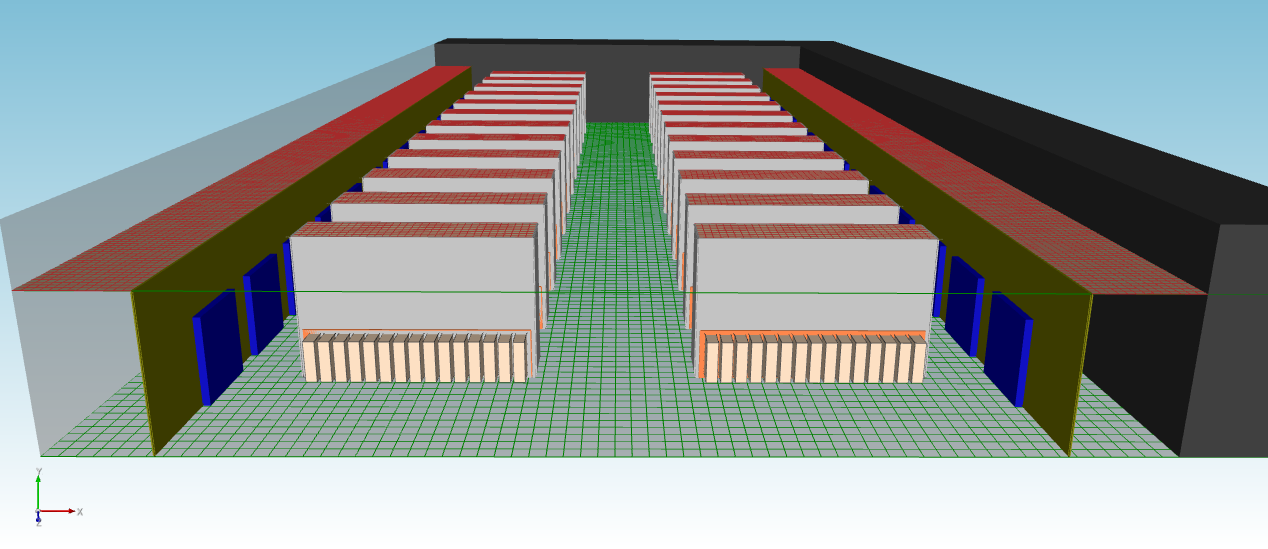

CoolSim model of a 3.2 MW raised floor room using hot aisle containment

Yet issues with underfloor airflow persisted: vortices in the supply plenum and non-uniform tile flow patterns demanded more sophisticated containment and airflow management.

Pathlines indicating swirling in the supply plenum

Non-uniform tile flow distribution

Hyperscale & Modular Infrastructure (2020s)

Leading hyperscale operators (e.g., Google, AWS, Azure) redefined scale and standardization. Data halls became modular, enabling rapid deployment and consistent performance. Cooling innovations included free-cooling designs, liquid-cooling adoption, and mandatory hot-aisle containment. Intelligent data-center infrastructure management (DCIM) tools provided real-time monitoring, crucial for supporting dense rack deployments exceeding 20 kW per rack (and in some cases peaking near 44 kW).
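To give a sense of what these rack densities mean for air cooling, the sketch below estimates the airflow a rack needs using the common sensible-heat approximation for standard air, Q (BTU/hr) = 1.08 × CFM × ΔT (°F). The 20 °F temperature rise across the rack is an assumed value, the function name is illustrative, and the result is a rough sizing estimate rather than a substitute for CFD analysis.

```python
BTU_PER_HR_PER_WATT = 3.412   # unit conversion: 1 W = 3.412 BTU/hr
SENSIBLE_HEAT_FACTOR = 1.08   # BTU/hr per (CFM * deg F), standard sea-level air


def required_cfm(rack_watts: float, delta_t_f: float) -> float:
    """Approximate airflow (CFM) needed to remove rack_watts at a given air temperature rise."""
    if delta_t_f <= 0:
        raise ValueError("delta_t_f must be positive")
    return rack_watts * BTU_PER_HR_PER_WATT / (SENSIBLE_HEAT_FACTOR * delta_t_f)


if __name__ == "__main__":
    assumed_delta_t_f = 20.0  # assumed temperature rise across the rack
    # Rack loads quoted in the article for successive eras.
    for rack_kw in (4, 10, 20, 44):
        cfm = required_cfm(rack_kw * 1000.0, assumed_delta_t_f)
        print(f"{rack_kw:>2} kW rack: about {cfm:,.0f} CFM")
```

Because airflow scales linearly with load at a fixed temperature rise, a 44 kW rack needs roughly eleven times the air of a 4 kW rack, which helps explain the move toward containment and liquid cooling.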

Fan wall, hot aisle contained design

Fan Wall Design Issues

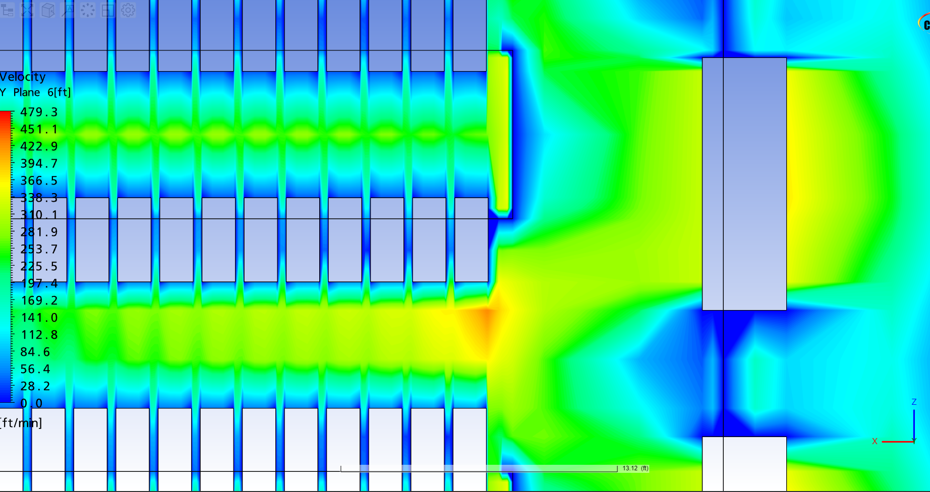

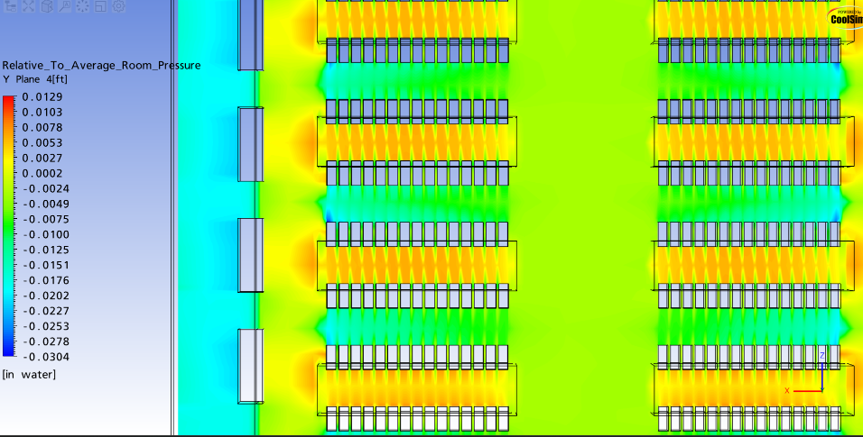

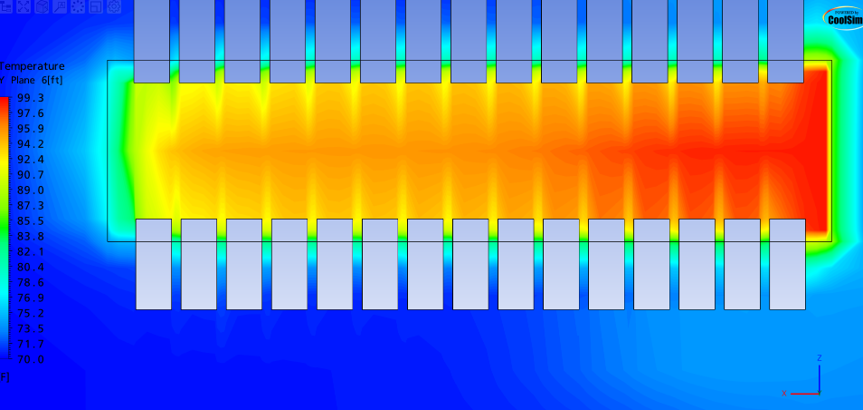

Given the size of hyperscale sites, the CRAC supply air moves with high velocity to ensure the center-most racks receive cool air. This induces a pressure differential on the leading edge of the rack pod, which can drive rack rail leakage:

Velocity contour plot at Y=6 ft

Pressure contour plot at Y=6 ft

Temperature contour plot at Y=6 ft

Modern Trends: Sustainability & Edge (2020s–Present)

The AI boom has redefined the data center landscape. Customers now expect infrastructure that accommodates AI workloads, driving increased power and cooling requirements.

Data center operators must balance this demand with increasing energy costs and new sustainability initiatives. Operators blend grid power with on-site renewables and shift compute-intensive jobs to periods when green electricity is plentiful.
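One way to picture shifting compute-intensive jobs toward greener electricity is as a window search over a grid carbon-intensity forecast. The sketch below is a simplified illustration, not a description of any operator's scheduler: the function name is hypothetical, and the hourly forecast values are made up for the example.

```python
from typing import Sequence


def greenest_start_hour(intensity_g_per_kwh: Sequence[float], job_hours: int) -> int:
    """Return the start index of the contiguous window with the lowest total carbon intensity.

    If several windows tie, the earliest one is returned.
    """
    if job_hours <= 0 or job_hours > len(intensity_g_per_kwh):
        raise ValueError("job_hours must be between 1 and the forecast length")
    last_start = len(intensity_g_per_kwh) - job_hours
    window_totals = [
        sum(intensity_g_per_kwh[start:start + job_hours])
        for start in range(last_start + 1)
    ]
    return min(range(len(window_totals)), key=window_totals.__getitem__)


if __name__ == "__main__":
    # Made-up 12-hour forecast (gCO2 per kWh), for illustration only.
    forecast = [450, 420, 380, 300, 210, 180, 190, 260, 340, 410, 460, 480]
    start = greenest_start_hour(forecast, job_hours=3)
    print(f"Start the 3-hour job at forecast hour {start}")
```

A production scheduler would also weigh deadlines, electricity prices, and available capacity; this sketch considers carbon intensity alone.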

Liquid cooling is reaching mainstream status, with rear-door heat exchangers, direct-to-chip loops, and even immersion cooling appearing in high-power-density sites.

Thousands of sensors feed predictive-maintenance software that spots problems early, so a lean operations team can effectively manage equipment throughout sprawling data halls. And by deploying smaller “edge” sites nearer to users, providers cut round-trip latency and relieve backbone networks, further trimming energy waste.

Future Outlook (2030s and Beyond)

Looking ahead, data-center infrastructure is poised to become fully autonomous and AI-optimized, striving for carbon-neutral or even carbon-negative operation. While quantum computing has not reached full maturity, planners may consider how advanced cryogenic cooling methods would be implemented at scale. The adoption of exterior heat dissipation strategies to ensure optimal chiller performance has begun, introducing

Conclusion

The evolution of data‑center design is a story of escalating density, efficiency, and complexity. Each architectural shift solved the limitations of its predecessor while introducing new airflow and thermal challenges—many of which were only fully understood through computational fluid dynamics (CFD) analysis.

As sustainability imperatives and AI continue to push the envelope, mastering airflow management and embedding intelligence at every layer will define the next generation of digital infrastructure.